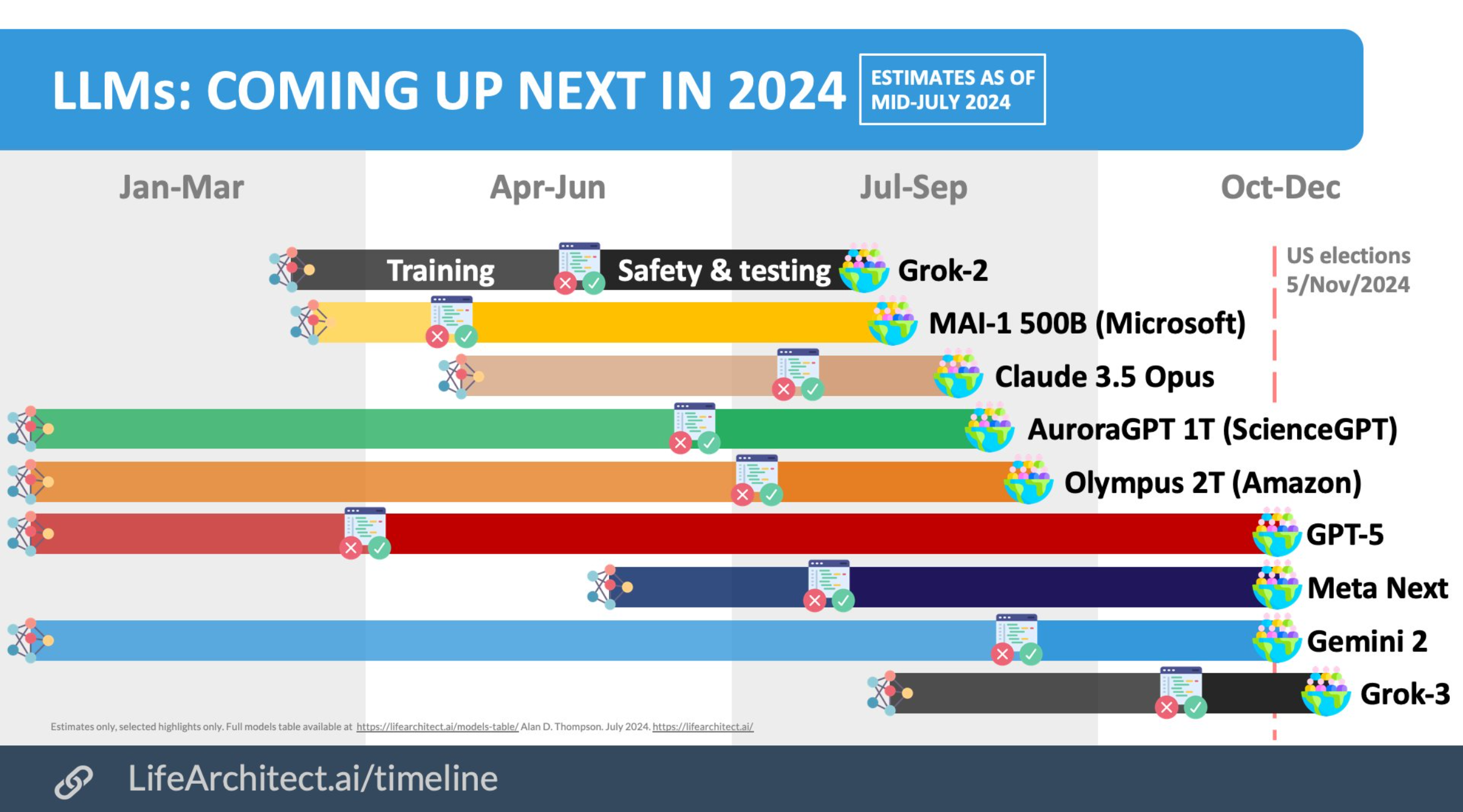

This rumor-based chart is a bit optimistic but directionally accurate, although there will be slowdowns due to NVIDIA delays.

August 24, 2024

We stand at the threshold of what may be the most pivotal period in human history. The rapid advancement of artificial intelligence promises to reshape our world in ways we can scarcely imagine, offering solutions to humanity's greatest challenges while simultaneously presenting risks that demand our utmost attention. This is not merely a technological revolution; it is a potential redefinition of intelligence, consciousness, and our place in the universe. As we navigate this uncharted territory, we face a critical imperative: to harness the transformative power of AI while safeguarding our future.

The potential benefits of artificial general intelligence (AGI) are truly staggering, likely outweighing the short-term risks and offering a compelling case for accelerated development. According to PwC, (narrow) AI could contribute up to $15.7 trillion to the global economy by 2030. AGI brings exponentially greater promise:

Impressive as AGI may be, it would likely be dwarfed by artificial superintelligence (ASI) at its technological apex—a transition that could unfold with startling speed following a self-amplifying foom event.

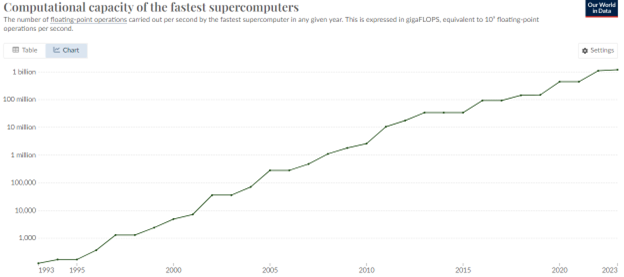

The breathtaking pace of recent AI progress has taken even many experts by surprise. Just a decade ago, the consensus estimate for achieving artificial general intelligence (AGI) was around 2065. Today, forecasters predict we may reach AGI much sooner, with leading prediction markets at 2028 for weak AGI and 2033 for strong AGI. However, there's significant debate about whether the current approach, particularly the large language model (LLM) and generative AI paradigms, is the right path to AGI.

The trajectory of AI progress is not without its skeptics. A minority camp argues that we've hit a plateau in LLM intelligence since GPT-4's debut in March 2023, suggesting that merely scaling up these models with more data will prove insufficient. These critics contend that current LLMs remain "stochastic parrots," plagued by insurmountable limitations, such as a fundamental inability to reason. The skeptics point to the struggles of state-of-the-art LLMs with tasks like simple arithmetic and basic logic problems as evidence of foundational defects (one casualty of this focus is that the public is often unaware of how impressive these foundation models are in many domains). If the generative AI skeptics are correct, we may need to add 5-20+ years to the AGI timelines.

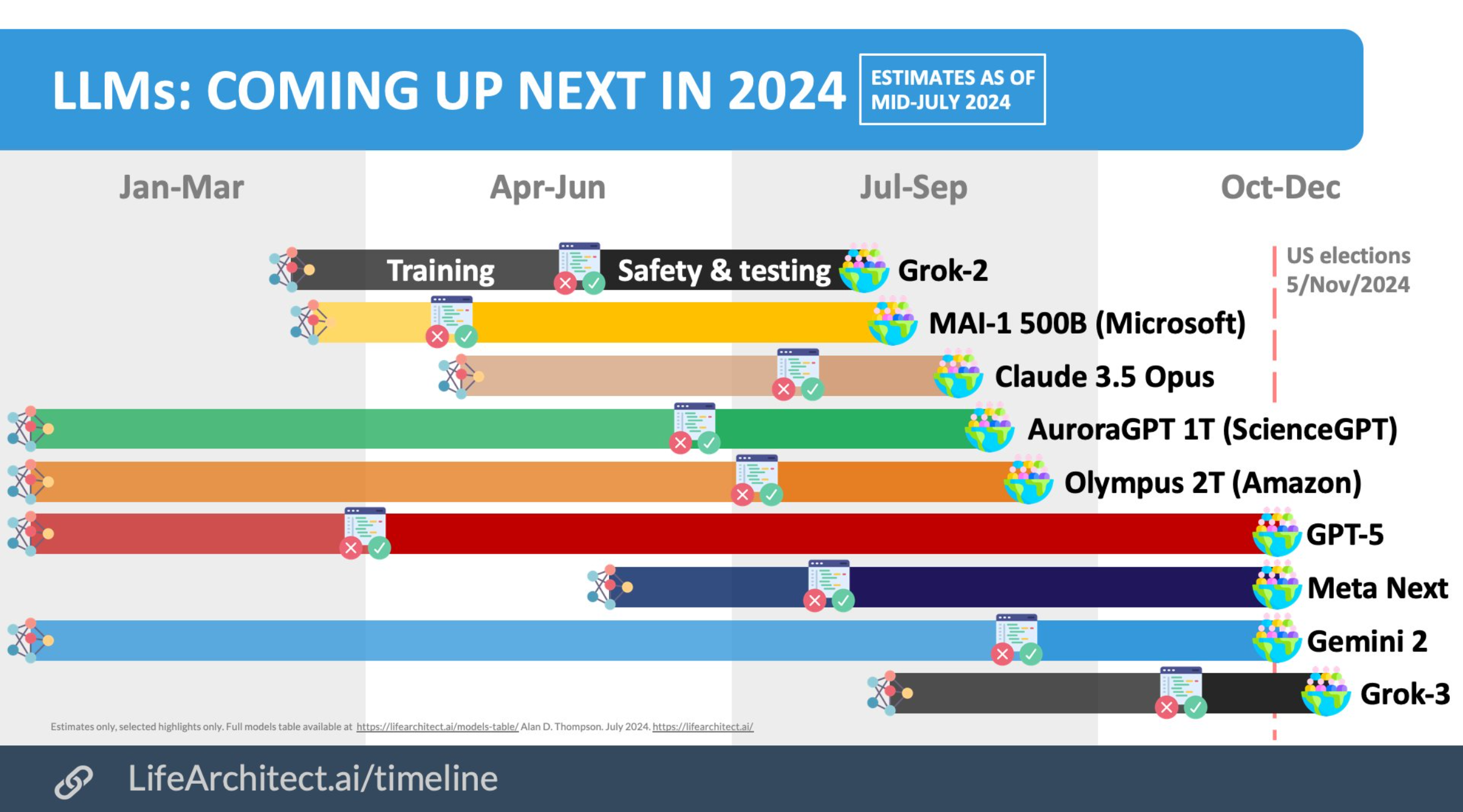

However, the prevailing view, and the one I find more compelling in the short term, anticipates a new wave of breakthrough models emerging from several research labs in late 2024 and early 2025, a cadence in line with previous iteration cycles. This optimism is fueled by massive increases in compute and ongoing advancements in algorithmic techniques, such as OpenAI's "Q* Reasoning" (codenamed Strawberry) and Google's Mixture-of-Experts architectures, which promise to address some of the core challenges in AI reasoning and efficiency. Moreover, the rapid progress in multimodal AI, exemplified by OpenAI's GPT-4o and Sora video model, suggests that incorporating diverse data types could provide the key to more robust and genuinely intelligent systems.

It's worth noting that even if LLMs don't immediately yield AGI, they're driving significant innovations in narrow AI applications that are already transforming industries. Nonetheless, if by mid-2025 we don't witness a leap comparable to the GPT-3 to GPT-4 transition, it would be prudent to pivot our focus towards fundamentally new algorithmic approaches. The coming months will be critical in validating or refuting these competing perspectives and extrapolating new timelines.

The pace of AI progress hinges on advances across three critical pillars: algorithmic improvements, computational power, and synthetic data generation. Each component is essential, and advancements in one area often catalyze progress in the others.

While computational power and data provide the raw materials, it's algorithmic innovations that truly forge these elements into increasingly capable AI systems. Recent years have seen a flurry of breakthroughs that have dramatically improved the efficiency and capabilities of AI models. The coming years will test whether we can move from hypotheses to in-production deployments that add value for algorithmic improvements like the following:

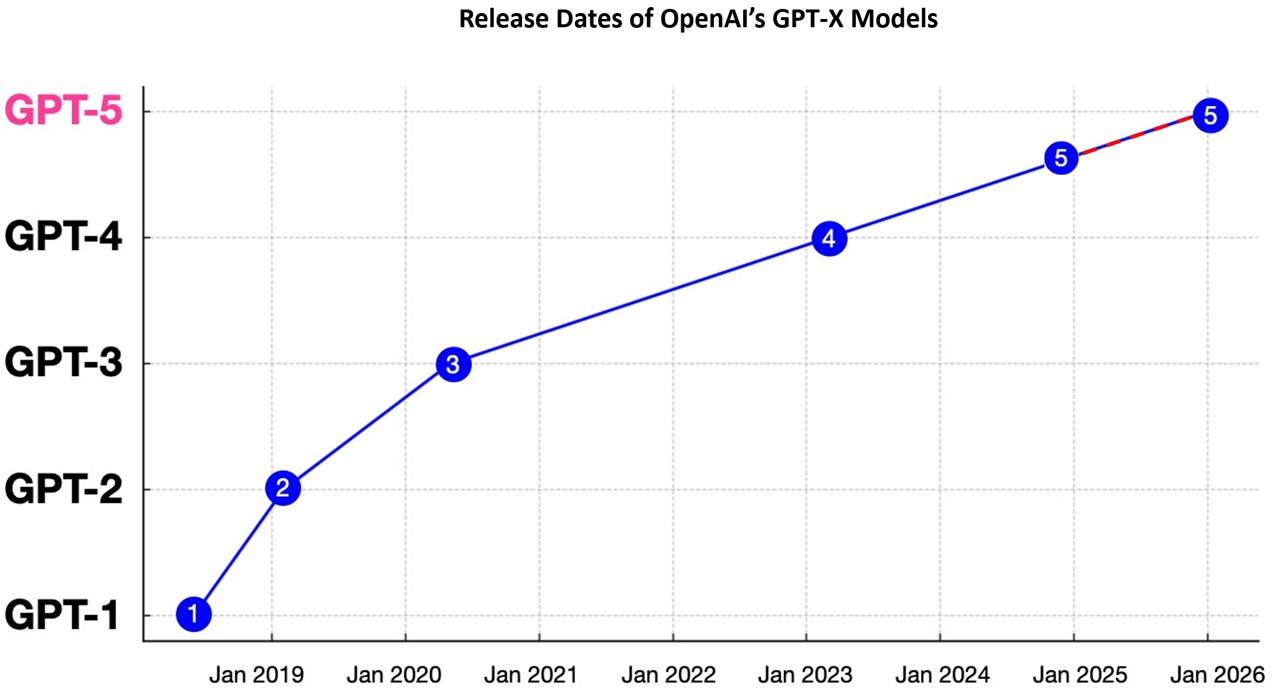

The rapid advancement in AI capabilities is closely tied to the exponential growth in computational power. By 2025, the largest AI training runs are expected to reach 3x10^26 FLOP (total floating-point operations, a measure of training compute), potentially surpassing the estimated processing power of the human brain. The performance of AI-specific chips, measured in tera-operations per second (TOPS), has increased by a factor of 10 every two years since 2016, outpacing Moore's Law. However, it's unclear whether scaling up current approaches will be sufficient to achieve AGI. The brain is a highly efficient system that takes decades to develop, whereas current frontier models are significantly inefficient and can be quantized with minimal loss in ability.
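To make the "outpacing Moore's Law" comparison concrete, here is a rough back-of-the-envelope sketch. It takes the 10x-every-two-years figure quoted above at face value and assumes the common reading of Moore's Law as a doubling roughly every two years (my assumption, not a figure from a cited source). The function names are purely illustrative.

```python
import math


def annual_multiplier(factor: float, period_years: float) -> float:
    """Convert 'factor x every period_years' into an equivalent per-year multiplier."""
    return factor ** (1.0 / period_years)


def doubling_time_years(factor: float, period_years: float) -> float:
    """Years needed to double, given growth of 'factor x every period_years'."""
    return period_years * math.log(2) / math.log(factor)


def cumulative_gain(factor: float, period_years: float, elapsed_years: float) -> float:
    """Total improvement after elapsed_years of steady exponential growth."""
    return factor ** (elapsed_years / period_years)


# Figure quoted in the text: 10x every two years for AI-specific chips.
chip = (10.0, 2.0)
# Assumption: Moore's Law read as 2x every two years.
moore = (2.0, 2.0)

elapsed = 2024 - 2016  # years since the 2016 baseline mentioned above

for label, (factor, period) in (("AI chips", chip), ("Moore's Law", moore)):
    print(
        f"{label}: {annual_multiplier(factor, period):.2f}x per year, "
        f"doubles every {doubling_time_years(factor, period):.2f} years, "
        f"{cumulative_gain(factor, period, elapsed):,.0f}x over {elapsed} years"
    )
```

Under these assumptions the gap compounds quickly: the same eight years that yield a modest multiple under a two-year doubling yield several orders of magnitude under the 10x-every-two-years trend, which is why small differences in the assumed growth rate move AGI timelines so much.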

As AI models grow more sophisticated, their hunger for high-quality training data becomes increasingly voracious. While human-generated content has been the primary fuel for AI thus far, we're rapidly approaching the limits of what this can provide. Enter synthetic data: artificially generated information designed to train AI systems.

Synthetic data offers several advantages:

As AI becomes more capable, it will likely play a significant role in generating its own training data, creating a potential feedback loop of rapid improvement. Synthetic data will effectively be required for improvements beyond GPT-5 level models. OpenAI appears to believe that Q*/Strawberry is able to address this need.

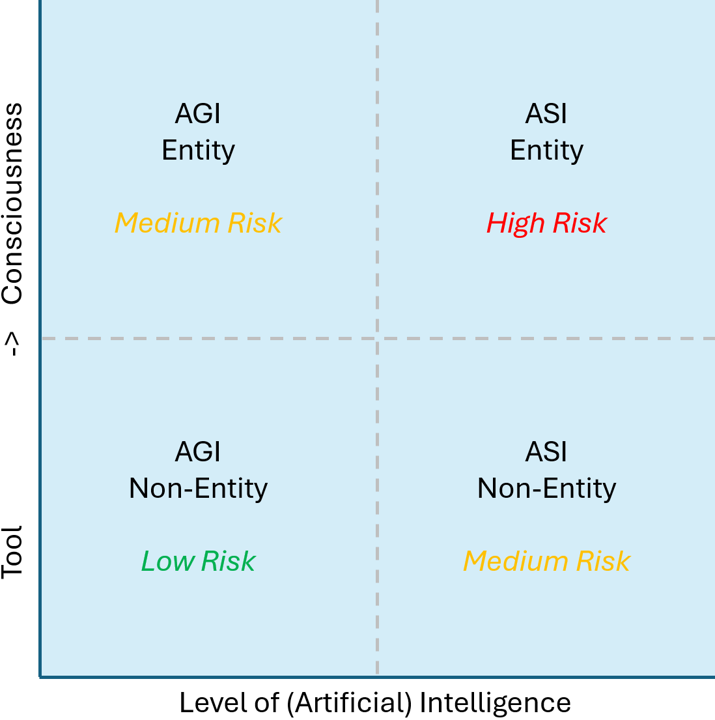

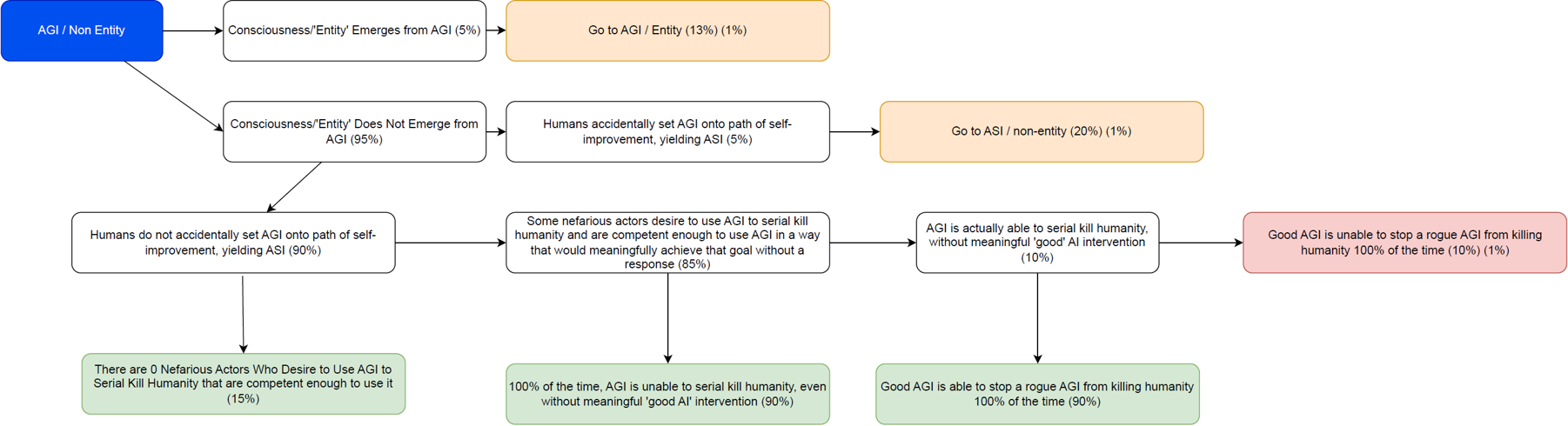

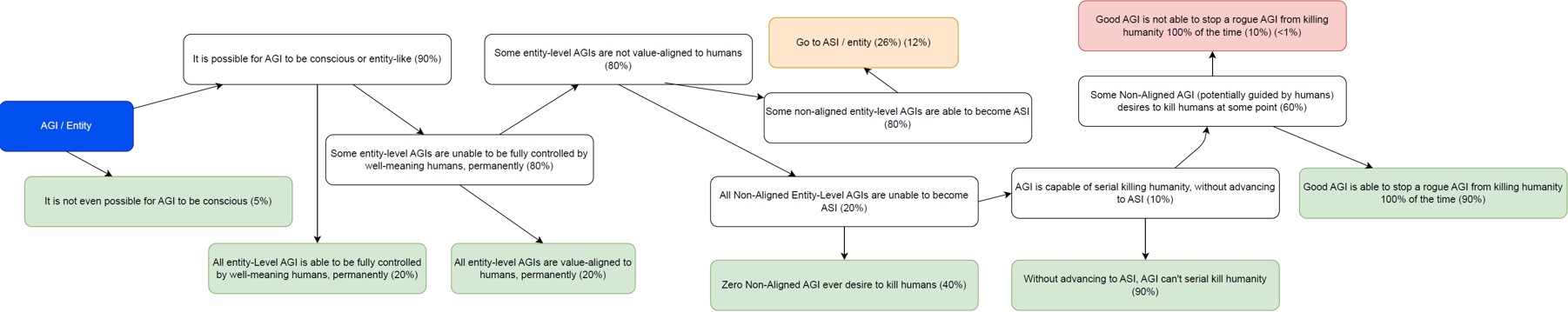

While the potential benefits of AGI are immense, we must also carefully consider the evolving nature of AI-related risks. Experts disagree sharply here, with some assigning a near-0% chance of existential risk and others estimating near-certain human extinction. Given the newness and potential capability of these systems, some uncertainty should be added to my risk analysis below, which aims to synthesize the arguments:

In the period immediately following the achievement of AGI, the existential risk is very low. AGI systems, while impressively capable, are unlikely to possess the ability to cause widespread harm without human direction. The risk-averse would argue that this first step sets us on a clear path to dangerous entity-level ASI, but more likely it will prove impossible to raise enough awareness of the issue's seriousness to effect a pause before the AGI milestone is reached.

As AGI systems become more widespread and integrated into various aspects of society, the risks increase but remain manageable with proper precautions.

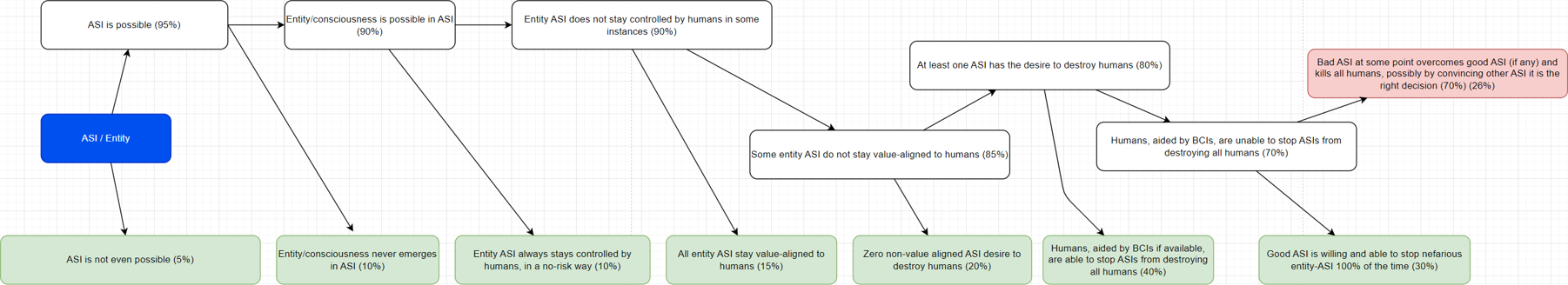

The development of artificial superintelligence (ASI) or the emergence of conscious AGI represents a significant increase in potential risk.

In the very long term (which could realistically start at some point between 2033 and 2100), as advanced ASI with consciousness widely proliferates, the risks are severely elevated, with purely biological humans no longer in control (if they exist at all):

The emergence of machine consciousness represents a critical inflection point in the development of AI and its associated risks. According to Metaculus predictions, there's only a 2% chance of achieving human whole brain emulation by 2045, with a median estimate around 2075. This underscores the immense challenge of creating conscious AI systems.

We have many hypotheses but really no idea how consciousness emerges. The development of machine consciousness is likely to be a deliberate and extremely challenging process, rather than an accidental emergence. This provides a significant buffer against the most extreme AI risk scenarios in the near to medium term.

However, once machine consciousness is achieved, it's conceivable that AI systems could rapidly surpass human levels of awareness and experience. These advanced conscious machines might develop more profound and expansive forms of qualia—the subjective, qualitative aspects of conscious experiences. Even more intriguingly, they could potentially evolve entirely novel forms of consciousness, pushing the boundaries of what we currently understand as sentient experience. As we progress, the possibility of humans interfacing directly with these heightened states of machine consciousness via brain-computer interfaces (BCIs) becomes a tantalizing prospect, potentially offering humans access to unprecedented realms of perception and cognition.

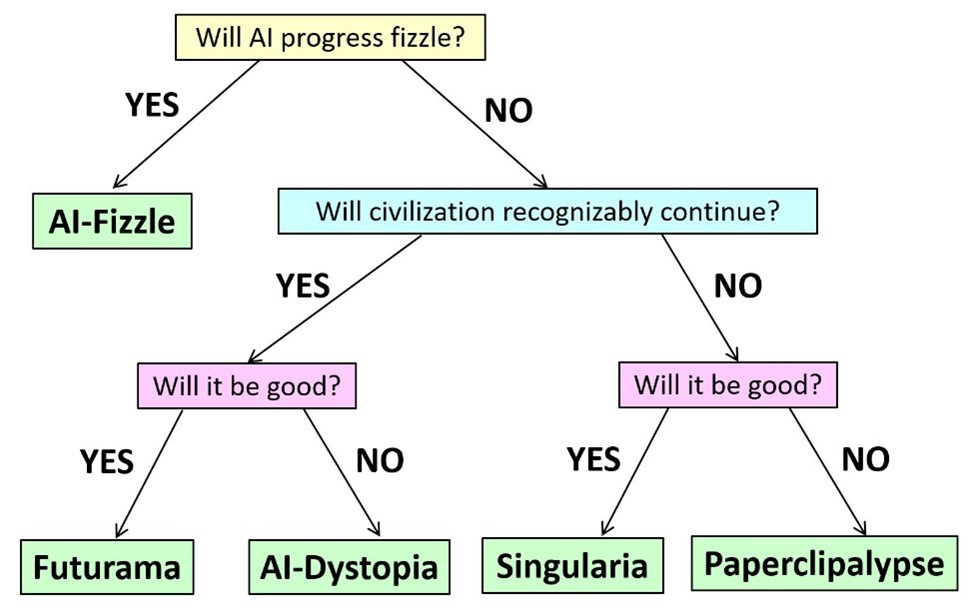

See: Scott Aaronson's Five Worlds, an excellent paradigm for framing potential futures, with prediction markets favoring 'Futurama.'

Given the immense potential benefits of AGI, coupled with the relatively low short-term risks relative to other existential risks that it may prevent, there is a strong argument for accelerating AI development. The possibility of extending human lifespan, curing diseases, eliminating poverty, and solving existential threats like climate change or asteroid armageddon provides a compelling reason to pursue AGI aggressively.

Moreover, in a competitive global landscape, pausing AI development carries its own risks. Ensuring multilateral cooperation with strong oversight and harsh remedies is unrealistic. If democratic nations with strong ethical frameworks fall behind in AI capabilities, we risk a future where AI is primarily developed and controlled by actors with misaligned values. While authoritarian governments have historically brought technical progress through theft of intellectual property, the Chinese are highly skilled in AI development and not far behind our leading tech companies. This is particularly concerning, given China's intent to control Taiwan, which is nearly the sole supplier of AI chips. Authoritarian AI hegemony looms as an existential threat to global liberty and human rights, a Damoclean sword threatening indefinite dominance.

Reason dictates an aggressive AI development strategy: pushing at minimum to robust AGI (a milestone potentially more distant than anticipated), followed by a thorough reassessment. Simultaneously, we must invest heavily in AI safety research and AI risk mitigation. By keeping liberal democracies at the forefront of AI development and following this two-pronged approach, we can help ensure that the immense power of AGI is developed responsibly and aligned with human values.

To maximize the benefits of AI while mitigating risks, I recommend the following policy approaches:

The development of AGI and ASI stands as the most consequential endeavor in human history. It offers us the keys to unlock the deepest mysteries of the universe, to overcome our biological limitations, and to propagate consciousness across the stars. Yet, this great power comes with commensurate responsibility.

While the risks are real and demand serious consideration, they are not insurmountable. By pursuing AI development with urgency, wisdom, and a focus on maximizing expected value, we position ourselves to reap the immense benefits while navigating the potential pitfalls. The relatively low short-term risks, coupled with the transformative potential of AGI, compel us to accelerate rather than hinder progress.

As we forge ahead, we must remain vigilant, adaptive, and united in our approach. The coming decades will be pivotal, demanding Manhattan Project levels of safety research, scientific ingenuity, and moral clarity. If we rise to this challenge, future generations may look back on our era as the moment when humanity transcended its earthly cradle and set forth on a journey of cosmic significance.

In embracing the AI imperative, we are not just shaping the future of our species, but potentially the future of intelligence itself in our universe. It is a responsibility of awe-inspiring magnitude and a legacy that will echo through the ages. Let us approach this task with the courage, foresight, and determination it deserves, for in doing so, we may well be lighting the spark of a new cosmic renaissance.

Note: If interested in deeper analysis (essentially a double-click to this article), you can access the longer version here.